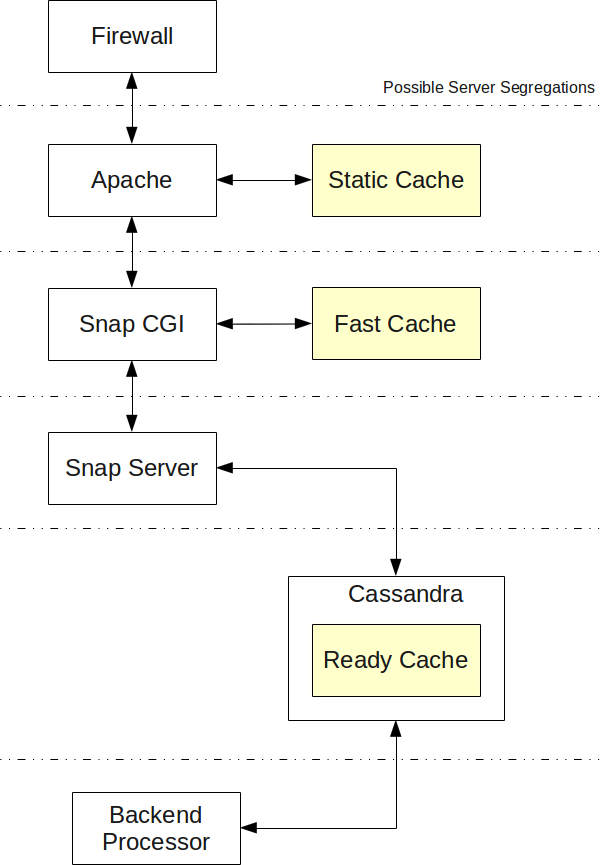

The cache is expected to be managed by the front end whenever the page being accessed does not yet exist on the server, and by the back end as it browses the database and detects pages that are not up to date.

There are several caching levels at which a full page can be saved for immediate retrieval later. There are also caching levels for data retrieved from the database; these are based on dependencies (the cache is recomputed only when one of its dependencies changes).

The figure shows the 3 potential caches:

This cache includes all static files such as JavaScript, images, CSS, XML, etc. that are not generated by the server.

This cache is generated by the server. Determining whether an entry is available requires complex decoding of the URL and other request options (e.g. language), so it is done by our tool instead of Apache. That said, some of the computation may require full access to the Cassandra server (TBD). We may otherwise need to have parameters that define each Fast Cache.

Also, the Fast Cache needs to be managed in such a way that it gets cleared if the status of a page changes (which is problematic if you have 20 machines running and therefore 20 similar caches).

This cache is also static and may be refreshed either by timing out or solely by being re-created by the backend processor.

The Ready Cache resides in the Cassandra database. Whenever a page request is received, we first check whether the page is cached. If so, we return the cached version to Snap.cgi, which then saves it in its Fast Cache.

The Backend Processor creates the Ready Cache on an ongoing basis. If an entry is not yet available when the front end needs it, the front end creates it.

Note that the Snap CGI connects to the Snap Server. In other words, we are adding a TCP network connection between the Apache/Snap CGI and the backend that generates the HTML pages. This means you already have a Proxy Server and do not need to use Apache to create one.

At some point the Snap CGI will be capable of Load Balancing using different schemes such as Round Robin or the Load Average of the available Snap Servers and, as mentioned below, Cache Balancing (e.g. access server A if accessing data of type X, access server B if accessing data of type Y, etc.). As we move forward, the Proxy capability can be a mix of all the available features (e.g. try the next server in a Round Robin manner that has a Load Average under a given level and that handles data of type X).
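The mixed scheme described above (Round Robin, filtered by Load Average and by data type) could be sketched as follows. This is only an illustration under assumptions: the `snap_server_info` structure, the `next_server()` function, and the `NO_SERVER` constant are hypothetical names, not part of the Snap CGI.

```cpp
#include <cassert>
#include <cstddef>
#include <set>
#include <string>
#include <vector>

// Hypothetical description of one Snap Server as seen by the proxy.
struct snap_server_info
{
    std::string             f_address;
    double                  f_load_average;
    std::set<std::string>   f_data_types;   // types of data this server handles
};

// Value returned when no server qualifies.
constexpr std::size_t NO_SERVER = static_cast<std::size_t>(-1);

// Starting at `cursor`, walk the servers in Round Robin order and return
// the index of the first one whose load average is under `max_load` and
// which handles `data_type`. On success, `cursor` is moved just past the
// selected server so the next call continues the rotation.
std::size_t next_server(
      std::vector<snap_server_info> const & servers
    , std::size_t & cursor
    , double max_load
    , std::string const & data_type)
{
    assert(max_load >= 0.0);

    std::size_t const count(servers.size());
    for(std::size_t i(0); i < count; ++i)
    {
        std::size_t const idx((cursor + i) % count);
        snap_server_info const & s(servers[idx]);
        if(s.f_load_average < max_load
        && s.f_data_types.count(data_type) != 0)
        {
            cursor = (idx + 1) % count;
            return idx;
        }
    }

    return NO_SERVER;
}
```

A caller would keep one `cursor` per proxy process and fall back to some default behavior (for example, any server at all) when `NO_SERVER` is returned.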

The HTTP 1.1 protocol introduced the ETag response header and the If-None-Match request header. These can be used instead of just the URI and the last modification time sent in the headers to know whether a resource changed. It can make things easier, although the ETag may actually just be the modification time of the resource.

This could prove useful for fast changing pages. A caching mechanism that allows a page to be cached for say 5 minutes minimum can use an ETag of:

modification time in seconds / 300

The drawback is that all the fast changing pages would eventually be rebuilt at exactly the same time. However, this is an extremely simple algorithm and thus easy to tweak to avoid that problem.
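As a minimal sketch of the formula above, the time in seconds is divided by the period (300 for 5 minutes) using integer division, so the ETag only changes once per period. The `offset` parameter is an assumption of this example, one possible tweak to keep all pages from switching at the same instant (it could be derived from the page path). The function names are hypothetical.

```cpp
#include <cassert>
#include <cstdint>
#include <string>

// Derive an ETag that changes at most once every `period` seconds.
// `timestamp` is a time in seconds; `offset` (assumed, not from the
// original design) shifts the moment at which a given page switches
// to its next ETag.
std::string bucket_etag(
      std::int64_t timestamp
    , std::int64_t period = 300
    , std::int64_t offset = 0)
{
    assert(period > 0);
    assert(timestamp >= 0 && offset >= 0);

    std::int64_t const bucket((timestamp + offset % period) / period);

    // HTTP entity tags are quoted strings
    return "\"" + std::to_string(bucket) + "\"";
}

// Simplified check: true when the If-None-Match value sent by the client
// is exactly the current ETag, in which case a 304 can be returned.
// Lists of tags and weak validators are deliberately not handled here.
bool not_modified(
      std::string const & if_none_match
    , std::string const & current_etag)
{
    return if_none_match == current_etag;
}
```

With the default period, timestamps 600 through 899 all produce the same ETag, and the value changes at 900.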

Data that gets cached comes with a (potentially large) number of parameters.

Each page getting cached needs to include all the parameters because next time we get a hit, that page can only be returned to people who have the exact same parameters.

Example of parameters:

Notice that if your website takes the user device, resolution, browser, protocol, and language options into account and you support 10 devices, 5 resolutions, 3 browsers, 2 protocols, and 10 languages, then you get a whopping 10 × 5 × 3 × 2 × 10 = 3,000 possibilities per page. Besides the fact that you'd have a hard time testing them all, it also means a potential for 3,000 cached files per page on your website! With a 1,000 page website, you'd have up to 3 million HTML files in your cache.
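The rule that a cached page can only be returned to requests with the exact same parameters amounts to making the parameters part of the cache key. Here is a minimal sketch; the `make_cache_key()` name, the parameter names, and the `|` and `=` separators are all assumptions chosen for illustration.

```cpp
#include <cassert>
#include <map>
#include <string>

// Parameter name -> value. std::map keeps the names sorted, so the same
// set of parameters always produces the same key, whatever the order in
// which they were collected.
using parameters_t = std::map<std::string, std::string>;

// Build a cache key from the page path and every parameter that can
// change the generated HTML. Two requests share a cached page only when
// their keys are identical. This sketch assumes that names and values
// never contain the '|' or '=' separators.
std::string make_cache_key(
      std::string const & path
    , parameters_t const & params)
{
    assert(!path.empty());

    std::string key(path);
    for(auto const & p : params)
    {
        key += '|';
        key += p.first;
        key += '=';
        key += p.second;
    }
    return key;
}
```

For example, `make_cache_key("/about", {{"lang", "en"}, {"device", "phone"}})` yields `/about|device=phone|lang=en`, while the same page requested in French gets a different key and therefore a different cache entry.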

Although the large number of files to create in a cache can look scary, it can be managed. To better serve users, it is wise to send users with a given set of parameters to a specific server (assuming that you can create X sets of about equal size based on the parameters). Each server's cache then only has to hold one subset of the large number of cached files.

For example, say you can check for HTTPS and the type of device the user has. Based on those two parameters, you could "cache balance" requests by sending users to specific servers that are likely to already have the page available in their fast cache!
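One possible way to "cache balance" on those two parameters is to hash them and use the result to select a server, so the same combination always lands on the same machine. The `pick_server()` function below is a hypothetical sketch; it uses the FNV-1a hash only because it gives the same result in every process, which `std::hash` does not guarantee.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <string>

// Map the (HTTPS, device) pair to a server index in [0, server_count).
// Requests with the same parameters are always sent to the same server,
// which is therefore likely to have the page in its Fast Cache already.
std::size_t pick_server(
      bool https
    , std::string const & device
    , std::size_t server_count)
{
    assert(server_count > 0);

    // 64 bit FNV-1a hash
    std::uint64_t h(14695981039346656037ULL);
    auto mix = [&h](unsigned char c)
    {
        h ^= c;
        h *= 1099511628211ULL;
    };

    mix(https ? 1 : 0);
    for(unsigned char c : device)
    {
        mix(c);
    }

    return static_cast<std::size_t>(h % server_count);
}
```

Note that a plain modulo does not guarantee sets of equal size, and changing the number of servers moves most combinations to a different machine; both points would need attention in a real deployment.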

The cache absence in one server can be a bonus for another server. Assume server A receives a request for page X right before server B. Server A can create a lock and get server B to actually wait for the Ready Cache instead of both A and B creating that same page. That way B can answer other requests since that specific process just fell asleep.

I think it is possible to create such a lock with the Cassandra database using a consistency level of ALL, at least for the read.

Assuming it is possible to return the data directly to a user from any one of our servers, a good idea is to compute a small request and ask all the servers whether they have that data cached. If so, we ask the one computer for a copy of its cache and return that data immediately.

To make this cache part of a proactive system, you want to have your backend servers that keep files in their fast cache to tell the proxy server that they have said cache and for how long they will hold it. Then any further requests to the proxy server first check the status of the caches and return the corresponding server.
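The bookkeeping on the proxy side could look like the sketch below. Everything here is hypothetical (the `cache_registry` class, its method names, and the choice of remembering a single server per cache key); it only illustrates the announce and lookup steps described above.

```cpp
#include <cassert>
#include <cstdint>
#include <map>
#include <string>

// Registry kept by the proxy: which backend server holds which cached
// page, and until when (time in seconds).
class cache_registry
{
private:
    struct entry_t
    {
        std::string     f_server;
        std::int64_t    f_expires_at;
    };

    std::map<std::string, entry_t>  f_entries;

public:
    // Called when a backend server reports that it holds `key` in its
    // fast cache until `expires_at`.
    void announce(
          std::string const & key
        , std::string const & server
        , std::int64_t expires_at)
    {
        assert(!server.empty());
        f_entries[key] = entry_t{ server, expires_at };
    }

    // Return the server holding `key` at time `now`, or an empty string
    // when no server announced it or the announcement expired.
    std::string lookup(std::string const & key, std::int64_t now)
    {
        auto it(f_entries.find(key));
        if(it == f_entries.end())
        {
            return std::string();
        }
        if(it->second.f_expires_at <= now)
        {
            f_entries.erase(it);
            return std::string();
        }
        return it->second.f_server;
    }
};
```

When `lookup()` returns an empty string, the proxy would fall back to its normal server selection.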

In order to know what to cache, each website shall count hits on each page. A page being hit once in a while (i.e. once an hour or less) is most certainly a waste for the cache assuming that the rest of the server flows nicely already.

So having statistics to mark which pages should be cached is a great idea.

Statistics should cover all the different parameters used by the site. For example, if you get hit once a day by a cell phone, then there is no need to cache data for cell phones. If you get hit 1,000 times per hour by cell phones, then caching for cell phones is worth the trouble. All the parameters that are used by a website need to be used by the cache to determine whether it is useful to cache a page, or at least to determine how long a new page should be kept in the cache. If you get 1,000 hits on a page per second, then caching becomes a necessity, and a page could be getting many hits all of a sudden just because someone shared it on the Internet.
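A minimal sketch of such statistics follows. The `hit_counter` class and the idea of a single hits-per-hour threshold are assumptions made for the example; the key is expected to combine the page and its parameters (device, language, etc.).

```cpp
#include <cassert>
#include <cstdint>
#include <map>
#include <string>

// Count hits per (page + parameters) key and decide whether a key is
// hit often enough to be worth caching.
class hit_counter
{
public:
    void record(std::string const & key)
    {
        ++f_hits[key];
    }

    std::uint64_t hits(std::string const & key) const
    {
        auto const it(f_hits.find(key));
        return it == f_hits.end() ? 0 : it->second;
    }

    // `hours` is the length of the observation window; the key is worth
    // caching when its hit rate reaches `min_hits_per_hour`.
    bool worth_caching(
          std::string const & key
        , double hours
        , double min_hits_per_hour) const
    {
        assert(hours > 0.0);
        return static_cast<double>(hits(key)) / hours >= min_hits_per_hour;
    }

private:
    std::map<std::string, std::uint64_t>    f_hits;
};
```

With a threshold of 10 hits per hour, a page hit 1,000 times in one hour by cell phones qualifies, while a key hit once in a day does not.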

When accessing a page, the server needs to compute the permissions for the user and for the page, then compute the intersection of those two groups of permissions. That information is saved in the cache table of the Cassandra database.
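The intersection step can be illustrated with the standard library. This sketch assumes that permissions can be represented as sets of strings; the permission names and the `intersect_permissions()` function are hypothetical, and the actual permission model may be richer than this.

```cpp
#include <algorithm>
#include <cassert>
#include <iterator>
#include <set>
#include <string>

using permission_set_t = std::set<std::string>;

// Return the permissions present in both the user's set and the page's
// set. The result is what would be saved in the cache so it does not
// have to be recomputed on the next access.
permission_set_t intersect_permissions(
      permission_set_t const & user
    , permission_set_t const & page)
{
    permission_set_t result;
    std::set_intersection(
              user.begin(), user.end()
            , page.begin(), page.end()
            , std::inserter(result, result.begin()));
    return result;
}
```

For instance, a user with `{"edit", "view"}` accessing a page with `{"administer", "view"}` ends up with `{"view"}`.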

This cache gets reset whenever the corresponding pages are modified: the page concerned itself, the page type permissions, the permission tables, etc. At this time, this works rather well. It is not clear whether it is indeed working 100% of the time or not, but I have not had any problems with this concept.

Dynamic websites are by definition dynamic. This means they have the bad habit of changing depending on parameters that are checked at the time the website is hit.

There are reasons for which we'd want to avoid using static caches. The main reasons are:

NOTE

Assuming you support 10 devices, 10 options and have 4 different statuses for a page, that's 400 possible ways the same page can be generated (assuming you have 1,000 pages on your site, your cache could grow to 400,000 HTML files!) Unless your site is being hit many times, this renders some of the caches totally useless since chances to return the same HTML file twice are slim.

The only real way to avoid problems with caches is to access the database each time and check the current status to determine whether a cached version exists. Yet, this is obviously contradictory to having fast caches in the first place.

Non-HTML data (e.g. JavaScript files, CSS, images, PDF files, flash animations, etc.) can generally be served ASAP from Apache (at least for publicly available data, see below).

HTML data is the part that's dynamic and needs a check. Actually, it could be many checks as that data could be dynamic depending on which user is logged in, which device is used, which language they are using, what the status of the site is, what the status of that page is (it could just have been unpublished!)

To Be Honest

Assuming that everything is programmed properly, a change to the content itself has the side effect of clearing all the attached caches. This means the only problem is dependencies (e.g. if a user decided to show Page A inside Page B, then whenever you modify Page A you need to clear the caches of Page B). Pretty much all cases can be handled in this way. See the talk about dependencies for more information.

See also: Dependencies

To continue on with the dynamism of CMS websites, some pages can be defined to be temporary. For example, maybe you're offering a product for a hefty discount (say 30% off.) That discount may be very temporary (say from Feb 25th till Mar 5th.) In that case, any page that displays this discount must be refreshed on Feb 25th to include the offer and again on Mar 6th to remove the offer.

The scheduler can be used to determine whether a page is up to date or not and to tell when the page needs to be taken out of the caches.

Static variables (defined in a function) and local variables (defined in an unnamed namespace) can be used to cache data while running an instance of the server. This can be used to cache data that does not change between calls to a function or set of functions.

However, static variables can be extremely treacherous. Remember that only the functions that have access to the static cache will be able to clear it. Also, it puts the responsibility of clearing the cache on ALL the other parties.

For example, the User plug-in wants to cache the list of users.

A special module is created to ask questions to a user (a survey of some kind) and at the end that module offers the user to create an account. It doesn't use the default user account registration form because it already has a lot of the necessary information and thus wants to simplify the form.

Once the special module has created the new user, the User plug-in knows nothing about that new user and cannot deal with the requests it receives from that special module. The static cache hides the real data and the new user information.

Anything being cached shall use the libQtCassandra system, which can be used as a memory cache. Whenever something is modified, the corresponding signal can then be used to either clear the cache or update whatever needs to be updated (e.g. in the case of our users, whenever you save a new user, it can easily also be saved in the libQtCassandra memory cache).

When Apache receives a request to load a binary file (i.e. image, PDF file, etc.) then it first checks to see whether the file is available on disk in the public folder of the website.

Something similar to these two lines of Apache code will do the trick:

    RewriteCond <public folder>/<filename> -s
    RewriteRule <public folder>/<filename> [L,QSA]

When the request for a public file fails, the request is forwarded to the Snap! Websites server, which retrieves the file from the database and saves it in the public folder for next time.

The -s on the RewriteCond is important because -f would return the file even if it is still empty. That way we avoid most accesses to a file that is not yet fully there. To further improve reliability, we want to save the file in a hidden folder and then move it to the public folder at once. This is very fast and avoids problems where Apache sends a partial file to a browser.
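The "write in a hidden folder, then move" step could be sketched as follows. The `publish_file()` function and its parameters are hypothetical. The sketch assumes that both paths are on the same filesystem, in which case `std::rename()` is atomic on POSIX systems, and that both folders already exist.

```cpp
#include <cassert>
#include <cstdio>
#include <fstream>
#include <string>

// Write `data` to `hidden_path` first, then move the complete file to
// `public_path` in one step. Apache never sees a partially written file
// in the public folder. Returns true on success.
bool publish_file(
      std::string const & hidden_path
    , std::string const & public_path
    , std::string const & data)
{
    assert(!hidden_path.empty() && !public_path.empty());

    std::ofstream out(hidden_path, std::ios::binary | std::ios::trunc);
    out.write(data.data(), static_cast<std::streamsize>(data.size()));
    out.close();
    if(!out)
    {
        // the write or the close failed, do not publish a broken file
        std::remove(hidden_path.c_str());
        return false;
    }

    // atomic on POSIX when both paths are on the same filesystem
    return std::rename(hidden_path.c_str(), public_path.c_str()) == 0;
}
```

On failure the public folder is left untouched, so the next request simply goes back to the Snap! Websites server.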

Public data can directly be handled by Apache. Private data, however, requires a check of the user to make sure that he or she has access to said data.

For this reason, private data is passed to the Snap! Websites server, which first checks whether the data is accessible. If so, it uses the copy available in the private folder. If there is no such copy, then it retrieves the data from the Cassandra database first. Finally, the first process that obtains a lock to save that file in the private folder does so.

Snap! Websites

An Open Source CMS System in C++